when is reinforcement learning useful?

Our experience with reinforcement learning ranges from training a robot to compete in the ‘wire game’ to optimizing the yield of a production process for medical isotopes, and from optimizing strategies for Defense to rapidly restoring a high-tech system to its equilibrium state after a disturbance. Drawing on this experience, we are happy to guide you on your way.

Reinforcement learning is useful when finding an optimal strategy is difficult: for example, when traditional methods for process control or optimization have been tried without success. Sometimes the physics of a system is too complex to model. In other cases there are simply too many possible actions and reactions before it becomes clear whether the chosen actions have worked, as in the game of Go. In all these cases, reinforcement learning could offer a solution, as AlphaGo demonstrated in its 2016 match against Lee Sedol.

No such thing as a free lunch

However, as Milton Friedman titled his 1975 book: "There's No Such Thing as a Free Lunch." The price of reinforcement learning is a huge amount of data, all of which is needed to arrive at a good solution. This data can come from existing log data of the process to be optimized, but a simulation model can also be a suitable way to generate it. To ultimately gain support for the solutions, the learned strategies should then be tested in practice.

starting with reinforcement learning.

By now you are probably wondering how you can put reinforcement learning to work for you. As mentioned above, reinforcement learning is particularly useful when there is a business case for optimizing complex strategies and data is available to learn these strategies.

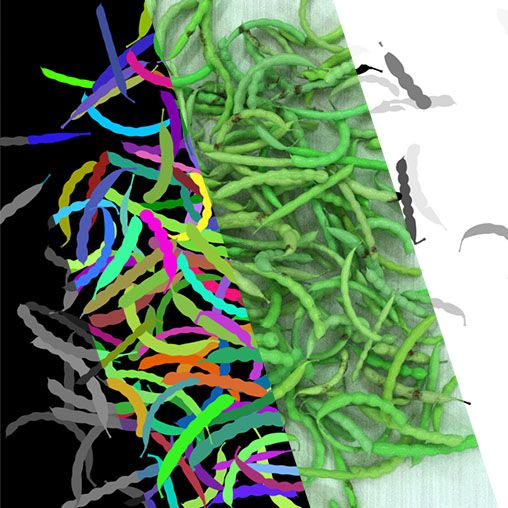

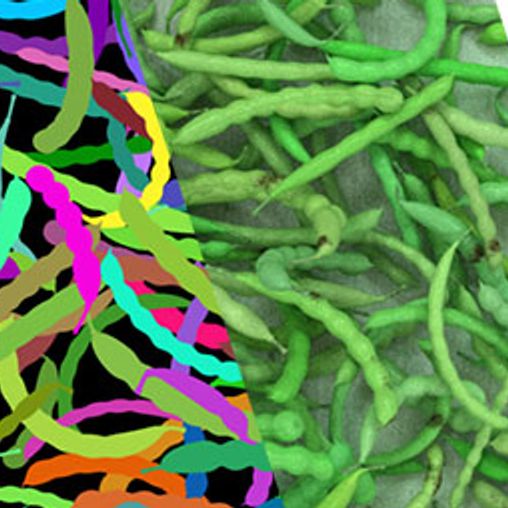

Once this step has been taken and a use case has been defined, the elements that make up a reinforcement learning algorithm have to be defined. To start, you need to define all possible actions the algorithm can choose from.

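Next, you have to decide how the optimal strategy will be represented: as a table that stores a value for every combination of situation and action (tabular), or as a parameterized model such as a neural network. This choice also determines which learning algorithms can be used.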
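To make these two choices concrete, here is a minimal sketch assuming a hypothetical toy problem: a corridor of five cells in which the agent starts in the leftmost cell and must reach the rightmost one. The action set is defined explicitly, and the strategy is stored as a table learned with tabular Q-learning, one common algorithm for table-based strategies. The names `step`, `q_learning` and `greedy_policy`, the environment and all parameter values are illustrative assumptions, not taken from our projects.

```python
import random

# Hypothetical toy problem: a corridor of N_STATES cells. The agent starts in cell 0
# and the episode ends with reward 1.0 when it reaches the last cell.
N_STATES = 5
ACTIONS = ("left", "right")  # the complete set of actions the agent may choose from


def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    if action == "left":
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def best_action(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: the strategy is a table with one value per (state, action)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the table, sometimes explore at random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = best_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Bootstrap from the best value in the next state (no future after the goal).
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Read the learned strategy off the table: the best action in each non-terminal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
```

Calling `greedy_policy(q_learning())` reads the learned strategy off the table; in this toy setting it should choose `"right"` in every cell. For realistic problems with far more situations, such a table quickly becomes too large, which is when a parameterized model such as a neural network is typically used instead.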

These questions must first be answered using human knowledge of the problem and its context, before we can flip the switch and let our computers start calculating.

where the magic happens: the reward function.

The most crucial aspect of reinforcement learning, however, is the reward function, which describes how much a chosen strategy ultimately yields. Its strength is that it defines very directly what 'optimal' means. In Go, this is winning the game, not an intermediate evaluation of the players' positions. This allows reinforcement learning to come up with surprising and creative solutions to complex problems that many other methods might not find.
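As an illustration of a reward that judges only the final outcome, here is a minimal sketch assuming a hypothetical game scored +1 for a win and -1 for a loss, with zero reward for every intermediate move. The discounted return (the reward received after a step plus γ times the return of the next step) is the standard way to turn such a reward into a value for each earlier decision. The function names and the discount factor are illustrative assumptions.

```python
def terminal_reward(game_over: bool, won: bool) -> float:
    """Go-style sparse reward: only the final outcome counts, not intermediate positions."""
    if not game_over:
        return 0.0
    return 1.0 if won else -1.0


def discounted_returns(rewards: list[float], gamma: float = 0.99) -> list[float]:
    """Return G_t = rewards[t] + gamma * G_{t+1} for every step, computed backwards."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns
```

For a hypothetical four-move game that is won on the last move, `discounted_returns([0.0, 0.0, 0.0, 1.0], gamma=0.5)` gives `[0.125, 0.25, 0.5, 1.0]`: earlier moves receive credit for the win only through the final result, discounted by how far away it was.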

In our projects, this reward function was crucial to outperforming other learning methods. It is also central to the development of self-driving cars, where the reward function offers the flexibility to optimize both for avoiding collisions in the short term and for planning in the long term.

Designing a reward function is not always as straightforward as in Go, where you either win or lose and the number of decisions averages about 100 moves. There, given enough examples, the algorithm, or so-called agent, can determine quite accurately what does or does not contribute to winning.

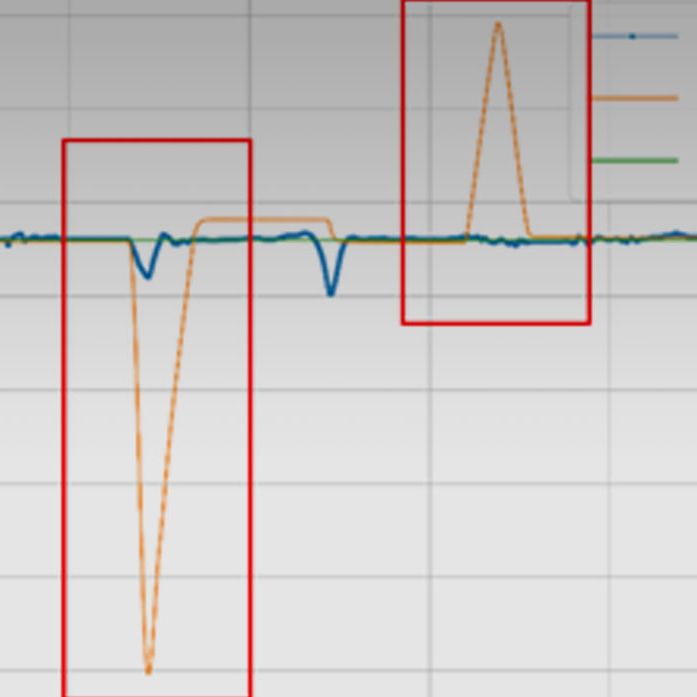

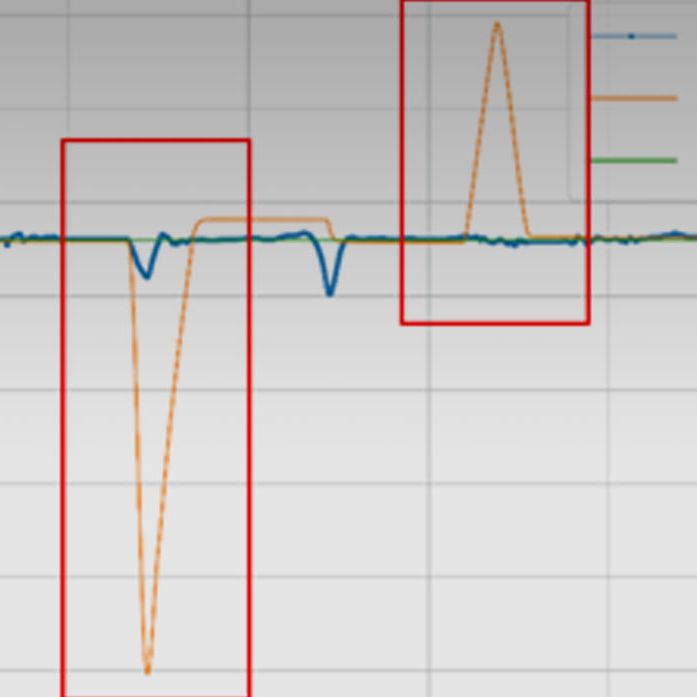

We have worked on projects with simulated training sessions lasting roughly 12 hours, which meant thousands of decisions for the agent. Because the agent is very unlikely to stumble on success by chance, it is necessary to provide intermediate signals of success. This is the tricky part: you need to give the agent enough leads to search for a successful strategy, without forcing a solution that merely seems obvious to humans.
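One well-known way to provide such intermediate signals without changing which strategy is optimal is potential-based reward shaping (Ng, Harada and Russell, 1999), shown here as a minimal sketch. It is a standard technique, not necessarily the approach used in our projects; the state (a hypothetical deviation from an equilibrium value) and the potential function `phi` are assumptions for illustration. The shaping term γ·Φ(s') − Φ(s) rewards progress toward states with a higher potential, and because these terms telescope along a trajectory, their discounted sum over an episode depends only on the starting state, which is why the optimal strategy is unchanged.

```python
from typing import Callable


def shaped_reward(
    reward: float,
    state: float,
    next_state: float,
    potential: Callable[[float], float],
    gamma: float = 0.99,
    done: bool = False,
) -> float:
    """Potential-based shaping: r + gamma * phi(s') - phi(s).

    The potential of a terminal state is taken as 0, a common convention that
    keeps the shaping terms from changing which strategy is optimal.
    """
    next_phi = 0.0 if done else potential(next_state)
    return reward + gamma * next_phi - potential(state)


def phi(deviation: float) -> float:
    """Hypothetical potential: the closer the system is to equilibrium, the higher it is."""
    return -abs(deviation)
```

With `gamma=1.0`, moving from a deviation of 2.0 to 1.0 earns a shaping bonus of 1.0 and moving back costs 1.0, so oscillating gains nothing; the total over any path depends only on its start and end points. The `gamma` passed here should match the discount factor of the learning algorithm.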

unscrewing the most complex bolts.

Lee Sedol retired from professional Go in 2019. As he put it: "losing to AI, in a sense, meant my entire world was collapsing." Although we empathize with his sense of loss, we believe that with reinforcement learning we now have an incredible screwdriver in our toolbox, one that can unscrew even the most complex bolts.

we would love to discuss your particular challenge and whether reinforcement learning could help you.