driven by data.

Together, we shape solutions that merge data and technology to drive your business. We balance realism and pragmatism with an open-minded approach to technological challenges. Our extensive multidisciplinary engineering knowledge allows us to deliver integrated system-level solutions, and our broad domain expertise across a wide range of markets and applications lets us understand your challenges quickly. As a partner for aspiring start-ups and established OEMs, we turn innovative concepts into qualified data-driven solutions.

the challenges we solve.

explore everything we have to offer.

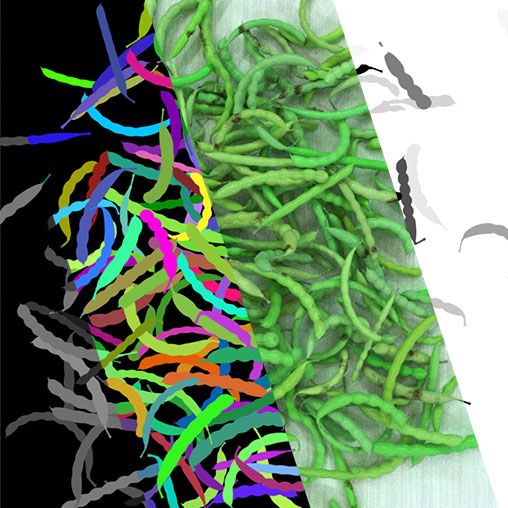

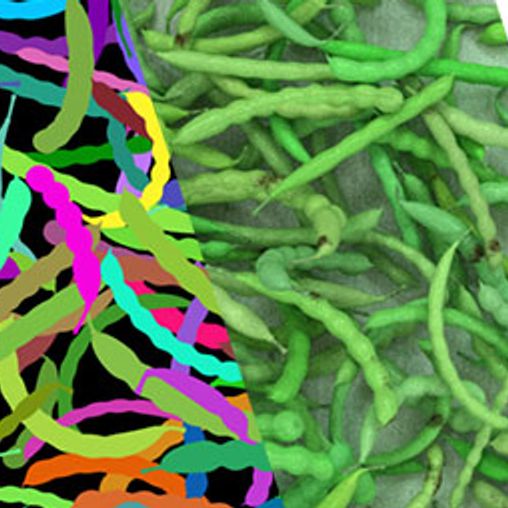

synthetic data

Generate near-infinite permutations of complex, domain-specific 3D environments

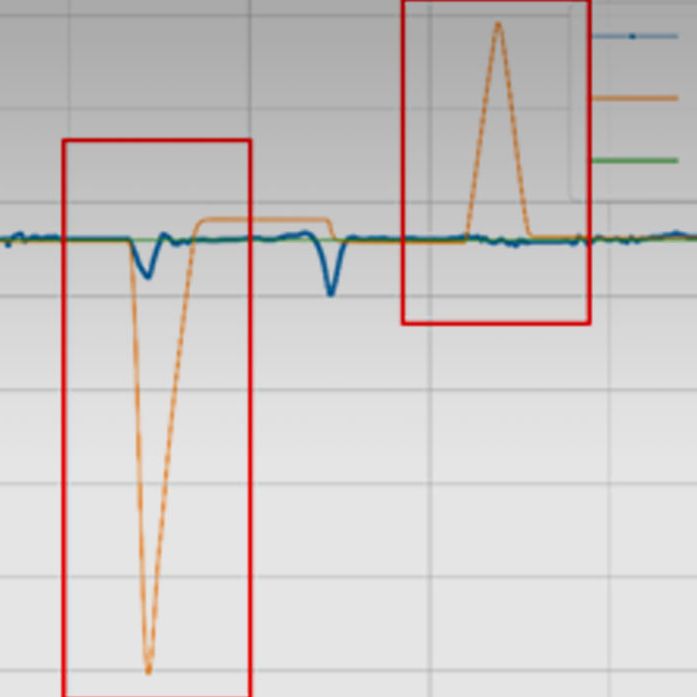

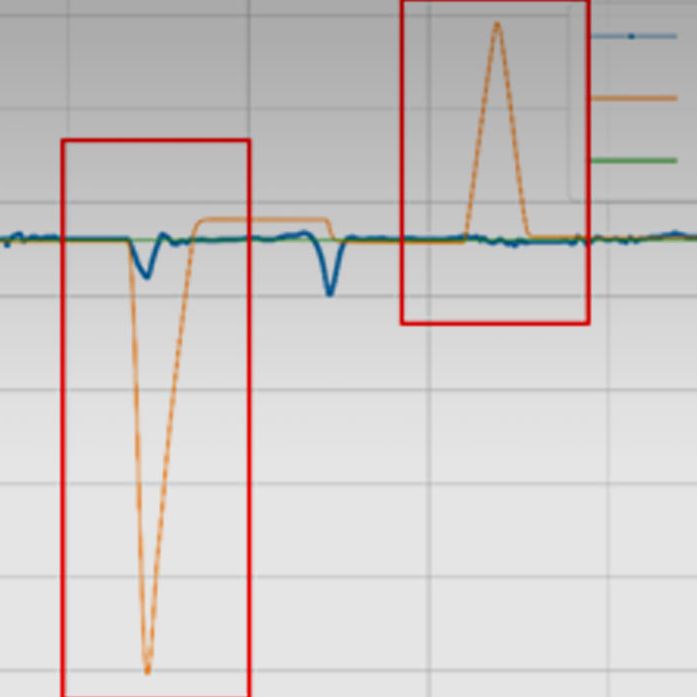

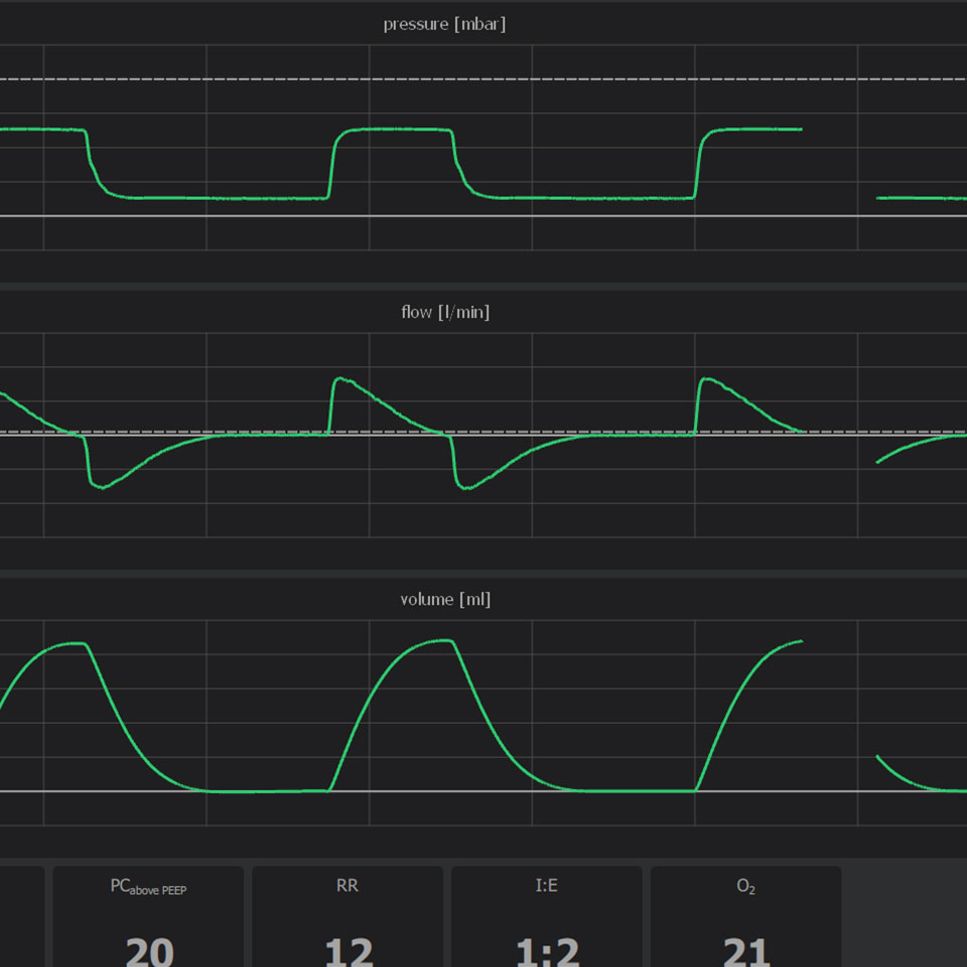

time series analysis

Increase functionality through actionable knowledge extraction

machine vision

More robust machine vision applications

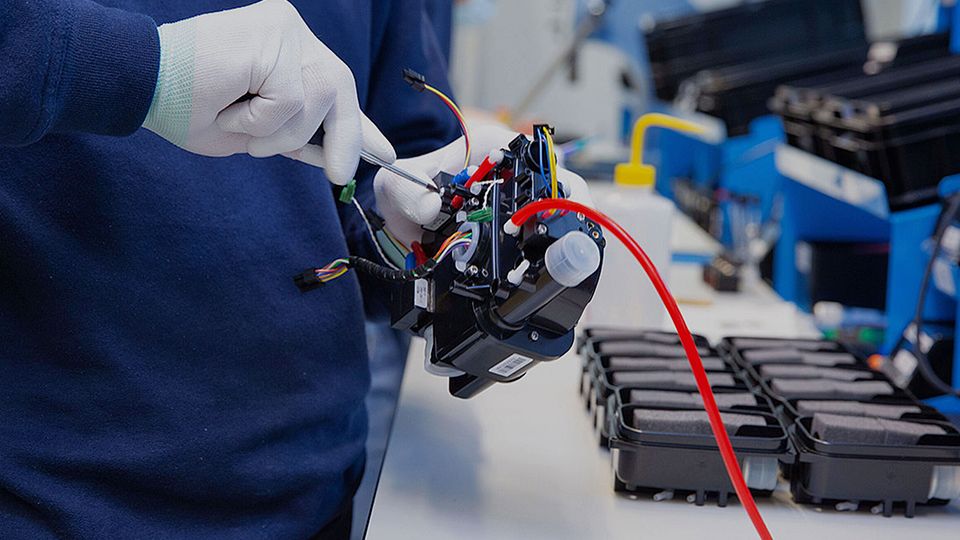

machine health monitoring

Optimize machine operation quality

reinforcement learning based control

Optimize control and machine performance